A machine learning model with no input variables

...that made it difficult for me to understand a familiar concept

Wikipedia is not the best resource for Data Science and Machine Learning beginners. Unlike academic courses, online courses, and books, it doesn't arrange topics so that each one builds on the previous. Instead, we get standalone articles with a very high entry level.

However, at the current stage of my Data Science journey, almost 4 years after graduation, I find many Wikipedia articles in-depth and rich. At the same time, I usually struggle to get through their strict way of explaining things, which frequently leaves me embarrassed and dissatisfied.

Today, I will share a short story about my encounter with the Likelihood function article on Wikipedia: from confusion to the joy of understanding and new insights.

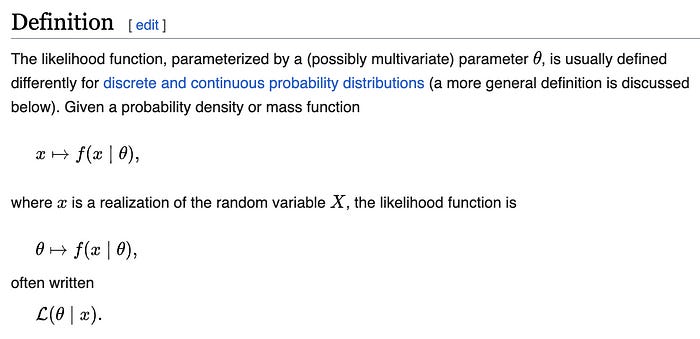

When reading through the Wikipedia article about the Likelihood function, I struggled to understand the given definition and example.

Something was missing there for me. I remembered my Data Science classes, where we covered the Likelihood function when training neural networks for classification with negative log-likelihood as the loss function, or inside a Naive Bayes model. It was always in the context of classic datasets like diabetes or iris. I was used to dealing with input variables (like sepal width or length) to classify data. However, I was unable to match that experience with the formulas I saw in the Wikipedia article.

So I immediately went through the coin flip example (they say an example is worth a thousand words…), but it still seemed very unfamiliar to me.

After reading it several times and taking a moment to reflect, the fog finally cleared, and I understood what was going on.

In both the example and the provided definition, there are none of the input variables I was used to working with. And there’s absolutely nothing wrong with that! It’s a perfectly fine situation, but it was hard for me to grasp at first glance.

The thing is that the given model is a probability distribution. Or, more precisely, a random variable described by a probability distribution. It indeed has no inputs, but it can produce outputs.
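A minimal Python sketch of such a model, assuming a coin flip as the example (the names `coin_model` and `p_heads` are illustrative, not taken from the article): the function has no input variables at all. Its only parameter is θ = `p_heads`, and the random number generator is just a source of randomness, yet the model still produces outputs.

```python
import random


def coin_model(p_heads: float, rng: random.Random) -> str:
    """A coin flip viewed as a model with no input variables.

    p_heads is the single parameter θ (the probability of heads);
    rng only supplies randomness, it is not a data input.
    """
    return "H" if rng.random() < p_heads else "T"


rng = random.Random(42)  # fixed seed so the run is reproducible
flips = [coin_model(0.5, rng) for _ in range(10)]
print(flips)
```

Changing `p_heads` changes how often heads shows up; nothing else goes into the model.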

From the Machine Learning perspective, the model in the example could take any form: a linear function, a deep neural network, a decision tree, a probabilistic graphical model, basically anything that produces outputs! Not only a random variable.

The random variable part of the definition wasn’t clear to me because I incorrectly assumed it was somehow connected to the idea of the Likelihood itself, when in fact it wasn’t.

To better explain what I mean, I need to go step-by-step through the definition.

Let’s unravel the two formulas the article provides. It starts with the “probability density or mass function”:

$$x \mapsto f(x \mid \theta)$$

We can read this right arrow sign as “function f maps x values to outputs”, and its main goal is to introduce the assumption that θ is a fixed (constant) parameter while x remains adjustable.

Since the article says we have a random variable X, x ranges over all possible values of this random variable, and f is its probability density function.

The most basic probability density function (PDF) that comes to my mind is the Normal Distribution. It takes exactly two parameters: the mean (μ) and the standard deviation (σ). Thus, the parameter set θ simply consists of μ and σ.

The chart shows an example of the function f: the probability density function of the Normal Distribution.
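The first formula, x ↦ f(x | θ), can be sketched in Python by freezing θ and leaving x free. This assumes the Normal Distribution as f and uses the standard normal (μ = 0, σ = 1) purely as an example; `normal_pdf` is a helper written for illustration, and the standard library’s `statistics.NormalDist(mu, sigma).pdf(x)` computes the same density.

```python
import math
from functools import partial


def normal_pdf(x: float, mu: float, sigma: float) -> float:
    """Density f(x | θ) of the Normal Distribution with θ = (mu, sigma)."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2 * math.pi))


# x ↦ f(x | θ): θ is frozen at (0, 1), only x remains adjustable
f_theta_fixed = partial(normal_pdf, mu=0.0, sigma=1.0)

for x in [-2.0, -1.0, 0.0, 1.0, 2.0]:
    print(x, round(f_theta_fixed(x), 4))
```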

We use this function to compute the probability of sampling a value from a particular range of the distribution: that probability is the area under the curve over the range.
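A short Python sketch of that idea using the standard library’s `statistics.NormalDist`, again with the standard normal chosen only as an example. The exact area comes from the cumulative distribution function (CDF), and a simple trapezoidal sum over the PDF approximates the same area.

```python
from statistics import NormalDist

dist = NormalDist(mu=0.0, sigma=1.0)  # standard normal, chosen for illustration
a, b = -1.0, 1.0

# Exact: P(a <= X <= b) = CDF(b) - CDF(a)
prob = dist.cdf(b) - dist.cdf(a)

# Approximate the same area under the PDF with the trapezoidal rule
n = 10_000
width = (b - a) / n
inner = sum(dist.pdf(a + i * width) for i in range(1, n))
area = width * (inner + (dist.pdf(a) + dist.pdf(b)) / 2)

print(round(prob, 4), round(area, 4))  # 0.6827 0.6827
```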

In the second formula, we get to the point and see the definition of the Likelihood function:

$$\theta \mapsto f(x \mid \theta)$$

which reads “function f maps the parameter set θ to outputs” and introduces an absolutely crucial assumption: from now on, x is fixed (constant) and θ is adjustable.

At this stage, the article says “x is a realization of the random variable X”, which basically means x is a value sampled from our distribution. Let’s say we actually sampled a value using an online tool or a Python script and got 1.52. We treat x as a fixed, ground-truth value here and can’t change it. θ is what we can freely change in this formula.

Let’s select some specific θ, then. For our Normal Distribution, I pick mean μ = 1 and standard deviation σ = 0.5, whatever. For these parameter values, the probability density at 1.52 is about 0.46. (Please note that I use the term probability density because, for continuous distributions, the probability itself is an area under the curve.)
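The second formula flips the roles: x is fixed at the observed 1.52 and θ = (μ, σ) varies. A minimal Python sketch, assuming the same Normal Distribution; `likelihood` is an illustrative helper name, and `statistics.NormalDist` provides the density. It reproduces the 0.46 from above and shows that another choice of θ gives a different value.

```python
from statistics import NormalDist

x_observed = 1.52  # the realization of X; fixed from now on


def likelihood(mu: float, sigma: float) -> float:
    """θ ↦ f(x | θ): the same density as before, but now θ = (mu, sigma) varies."""
    return NormalDist(mu, sigma).pdf(x_observed)


print(round(likelihood(1.0, 0.5), 2))  # 0.46
print(round(likelihood(1.5, 0.5), 2))  # 0.8
```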

And that’s it. This is how the likelihood works. Given an observed value, we select a parameter set, and the likelihood tells us how probable this value is under those parameters (for continuous distributions, strictly speaking, how high its probability density is).

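The most useful application I’m aware of is finding the distribution parameters θ under which the sampled value is most likely. By trying many different mean and standard deviation values, we look for the ones under which our sampled value 1.52 is most likely. This task is called maximum likelihood estimation (MLE). (With a single observation and both parameters free, the search degenerates: setting μ = 1.52 and shrinking σ toward 0 makes the density grow without bound, which is why MLE is normally run on many observations.)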
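A brute-force version of MLE for the single observation 1.52, sketched in Python. To keep it simple, this assumes σ is held fixed at 0.5 and only the mean μ is searched over a grid; real MLE usually works with many observations and an optimizer or a closed-form solution rather than a grid.

```python
from statistics import NormalDist

x_observed = 1.52
sigma = 0.5  # held fixed in this sketch; only the mean is searched

# Candidate means 0.00, 0.01, ..., 3.00
candidate_mus = [i / 100 for i in range(301)]


def likelihood(mu: float) -> float:
    """Likelihood of the fixed observation for a candidate mean."""
    return NormalDist(mu, sigma).pdf(x_observed)


best_mu = max(candidate_mus, key=likelihood)
print(best_mu)  # 1.52
```

The winner is μ = 1.52, the observed value itself: with a single data point and σ fixed, the normal density is highest when the mean sits exactly on it.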

Noooooow, the best thing is that the function f from the definition can be a Probability Density Function or a Probability Mass Function (for discrete distributions), and it doesn’t have to describe just a random variable. From the machine learning perspective, it can describe any model whose outputs we can read as probabilities. So let’s make things sexier and pick a Large Language Model (LLM) as an example.

X now becomes our LLM. The small x from the formula becomes a data sample the LLM works with. Since LLMs are trained to predict the next token in a sequence, our x becomes a tuple (“Data Science is”, “fun”), where the first element is the input to the model and the second is the expected output. The full sequence “Data Science is fun” is our ground-truth observation, scraped from the internet or maybe found in a book.

LLMs are neural networks whose parameter set θ consists of weights and biases. When we feed the model our input “Data Science is”, we get probabilities for all possible completion tokens in the model’s vocabulary. Let’s say we work with some state-of-the-art model found on the web, and it assigns a probability of 0.8 to the “fun” token. Good.

We can change the weights and biases in some way, pass the input sequence “Data Science is” through the model again, and check whether the probability of sampling “fun” has increased. At the highest level of abstraction, this is how language models learn. Yeah.
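A real LLM is far too big to show here, so this Python sketch swaps it for a deliberately tiny, hypothetical stand-in: a four-token vocabulary and a “model” whose parameters θ are just one logit per token (it ignores the input text entirely). It follows the steps above: compute the probability of “fun”, change θ, and check that the probability went up. The change is one step of gradient ascent on log p(“fun”), which is the same as one descent step on the negative log-likelihood loss.

```python
import math

# Hypothetical tiny vocabulary; a real LLM has tens of thousands of tokens
VOCAB = ["fun", "hard", "boring", "everywhere"]


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# x = (input, expected output); this toy model ignores the input text
context, target_word = ("Data Science is", "fun")
target = VOCAB.index(target_word)

# Hypothetical parameters θ: one logit per vocabulary token
theta = [1.0, 0.5, 0.2, -1.0]
p_before = softmax(theta)[target]

# One gradient-ascent step on log p(target | context; θ).
# For softmax, d log p_target / d logit_k = 1[k == target] - p_k.
learning_rate = 0.5
probs = softmax(theta)
theta = [
    z + learning_rate * ((1.0 if k == target else 0.0) - p)
    for k, (z, p) in enumerate(zip(theta, probs))
]
p_after = softmax(theta)[target]

print(f"p('fun') before: {p_before:.3f}, after: {p_after:.3f}")
```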

From then on, I was able to separate the idea of the model being a random variable from the concept of likelihood itself, and to understand both Wikipedia’s definition and its example.

And I could’ve stopped here, but my mind ran further. I quickly sorted the potential models into two groups: deterministic and non-deterministic. Deterministic models usually have some kind of input variables, because without them they become constant functions, which is not very useful…

Non-deterministic models introduce some randomness. They can take no inputs, like the coin flip in Wikipedia’s example. But we can also combine them with anything from basic math operations on input variables to large computational graphs. For example, Variational Autoencoders consist of a deterministic encoder, a deterministic decoder, and normal-distribution sampling in between.
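That encoder, sampling, decoder structure can be sketched in plain Python. Everything here is hypothetical and untrained: a one-dimensional “encoder” and “decoder” with made-up weights, and the sampling step written as z = μ + σ·ε, the reparameterized form commonly used in VAEs. The point is only that deterministic pieces plus one random draw in the middle make the whole model non-deterministic.

```python
import math
import random


def encoder(x):
    """Deterministic: maps an input to the mean and log std of a normal distribution."""
    mu = 0.8 * x + 0.1  # made-up weights, not trained
    log_sigma = -1.0    # constant log std for simplicity
    return mu, log_sigma


def sample_latent(mu, log_sigma, rng):
    """Non-deterministic: draw z ~ Normal(mu, sigma) as z = mu + sigma * eps."""
    eps = rng.gauss(0.0, 1.0)
    return mu + math.exp(log_sigma) * eps


def decoder(z):
    """Deterministic: maps the latent value back to output space."""
    return 1.25 * z - 0.1  # made-up weights, not trained


rng = random.Random(0)  # fixed seed so the run is reproducible
x = 2.0
outputs = [decoder(sample_latent(*encoder(x), rng)) for _ in range(3)]
print(outputs)  # the same input gives a different output on each call
```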

That’s it. I will have more respect for coin flips now, knowing that even an ordinary coin is a serious machine learning model…

We’ll see if this new point of view helps me get through more articles quickly. I hope it helps you too. Cheers!